Testing

If you got this far, you’re probably wondering if the effort is worth it and what kind of performance gains you can expect from using an NVMe configuration.

For the purpose of comparison, the SU900 256GB SATA and the SX8200 Pro 256GB M.2 in NVMe mode should be considered typical of what you can expect from the two different SSD technologies in non-RAID configurations.

Due to bandwidth limitations of the SATA interface and the technology used in the SU900, it is not meant to compete with the XPG SX8200 Pro. The comparison is intended to demonstrate the difference from a typical SSD that most people might have in their system.

Testing Notes

- All results are the median actual results from at least 3 runs undertaken no less than 5 minutes apart. We could have gone for averages but we wanted to use an actual result from an actual run.

- The ADATA SU900 2.5″ SATA SSD was set to AHCI for all benchmarks.

- The ADATA XPG SX8200 Pro was tested as a standalone drive with the motherboard controller set to the default mode first as part of baseline testing and then tested again with the controller in RAID mode to see the performance impact of the different modes on a single drive.

- All test results are in the same position on the graph for consistency – the results are not ranked.
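To illustrate the first note above, here is a minimal sketch of picking the median of several runs instead of the average. The three figures are hypothetical placeholders, not results from this article.

```python
from statistics import mean, median

# Hypothetical sequential-read results (MB/s) from three runs of one test.
# These numbers are illustrative only and were not measured for this article.
runs_mb_s = [3480.0, 3495.0, 3310.0]

# With an odd number of runs, the median is always one of the measured values.
median_result = median(runs_mb_s)

# The mean is pulled towards the slow outlier and matches no actual run.
mean_result = mean(runs_mb_s)

print(f"median: {median_result:.1f} MB/s")
print(f"mean:   {mean_result:.1f} MB/s")
```

With an even number of runs, `statistics.median` averages the two middle values, so `statistics.median_low` would be the choice if the reported figure must come from an actual run.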

Understanding the Results

There are a lot of numbers in the graphs, some displaying notable differences whilst others show little variation across the different configurations. The graphs also refer to queues and threads in addition to the transfer speeds. Queue depth is the number of I/O requests outstanding at once (loosely, how many files are lined up), and threads indicate how many simultaneous transfer operations are running.

To make these results a little easier to interpret I’ve listed some rules of thumb below:

- Sequential Read/Write with a small queue is a good indicator of a large file copy or game loading performance.

- Sequential Read/Write performance with a large queue indicates the performance to be expected when copying multiple large files.

- 4K/4KiB small files with a small queue depth represents typical everyday system use.

- 4K/4KiB small files with a large queue depth is what occurs when copying folders of files.

- The variation in Threads can help to benchmark for multi-tasking environments.

Versions Are Important

The configuration tested is as follows. The exact versions are very important, as your experience will almost certainly be different if you use different versions of the driver, utilities or Windows build.

| Component | Version |
| --- | --- |
| Windows 10 Pro | Edition: 1809, OS Build 17763.615 |
| X399 ZENITH EXTREME | BIOS Version: 1701 |
| AMD RAIDXpert Driver | Driver Version: 9.2.0-00087 |
| CrystalDiskMark 6 (64bit) Benchmark | Version: 6.0.2 x64 |
| ATTO Disk Benchmark | Version 4.01.0f1 |
| AS SSD Benchmark | Version: 2.0.6821.41776 |
| ANVIL Storage Utilities | Version 1.1.0 |

Please note that there is a reported driver conflict between Windows 10 version 1903 and older AMD RAID drivers for both SATA and NVMe.

UPDATE 02-May-2020: I can confirm that updating the RAIDXpert2 version/driver to 9.3.0-000038 before updating Windows to version 1909 was successful. The array that I set up here has been used on a daily basis since this article went live and the system has been 100% stable without any blue-screens or other stability issues.

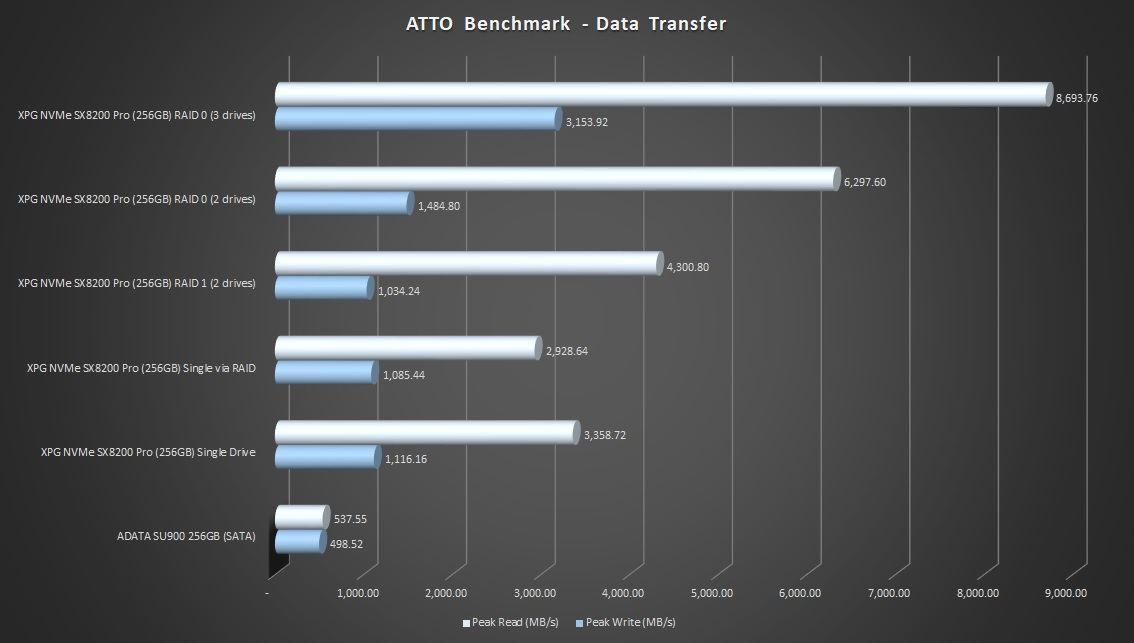

ATTO

We see that the single SX8200 Pro in RAID mode operates slower than when reading in the normal (non-RAID) mode. This is a common observation across all tests, particularly in the write testing as there is overhead in writing the same data to both drives in the array.

Peak read speeds scale in an almost linear manner for the NVMe RAID arrays, with RAID 0 (3 drives) hitting 8,693 MB/s compared to the single-drive performance of about 3,358 MB/s. These peak speeds occur when processing larger sequential files.

Peak write speeds were less linear but still demonstrated a pattern of improved performance.
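As a rough check on the "almost linear" read scaling, the sketch below divides the speedup over a single drive by the number of drives. The two ATTO peak read figures are the ones quoted above; the helper name `scaling_efficiency` is made up for this example.

```python
def scaling_efficiency(array_mb_s: float, single_mb_s: float, drives: int) -> tuple[float, float]:
    """Return (speedup over one drive, fraction of perfect linear scaling)."""
    speedup = array_mb_s / single_mb_s
    return speedup, speedup / drives

# ATTO peak read figures quoted in the text (MB/s).
speedup, efficiency = scaling_efficiency(array_mb_s=8693, single_mb_s=3358, drives=3)

print(f"3-drive RAID 0 speedup: {speedup:.2f}x")
print(f"scaling efficiency:     {efficiency:.0%} of linear")
```

A value of 100% would mean each added drive contributed its full single-drive throughput.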

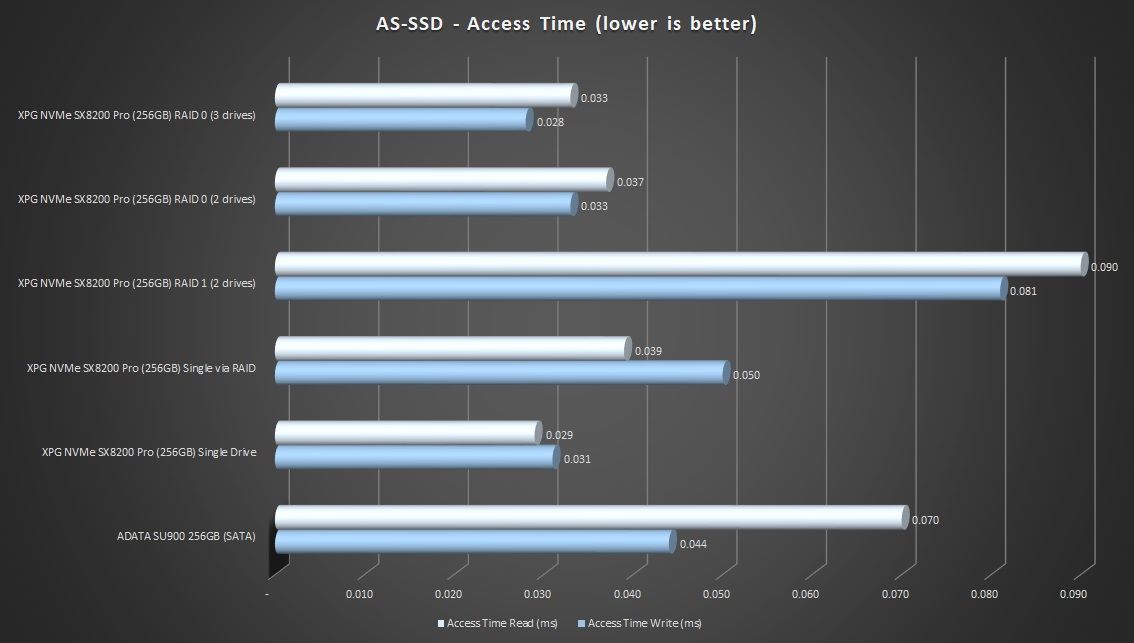

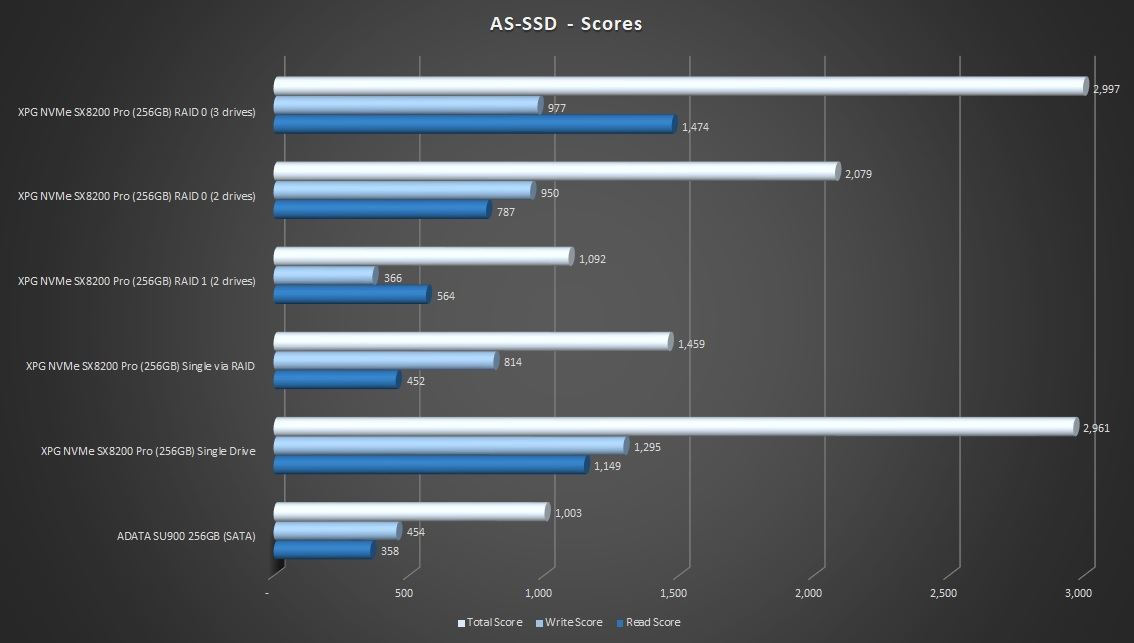

AS-SSD

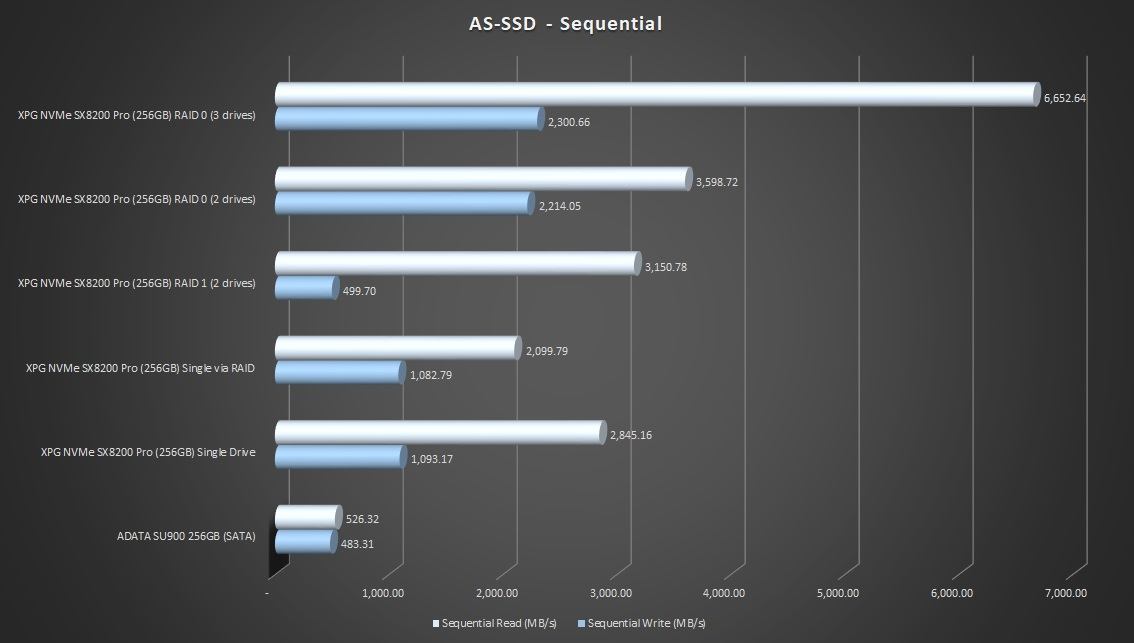

Once again, we see the single drive take a performance hit in RAID mode when compared to default NVMe.

There is a clear performance benefit for sequential reads and writes when moving to RAID 0 over a single NVMe configuration. Comparing the numbers to the SATA ADATA SU900 is almost like comparing SATA SSDs to mechanical hard drives. For large sequential files, the 3-drive RAID 0 configuration delivers more than double the performance of the single NVMe SSD.

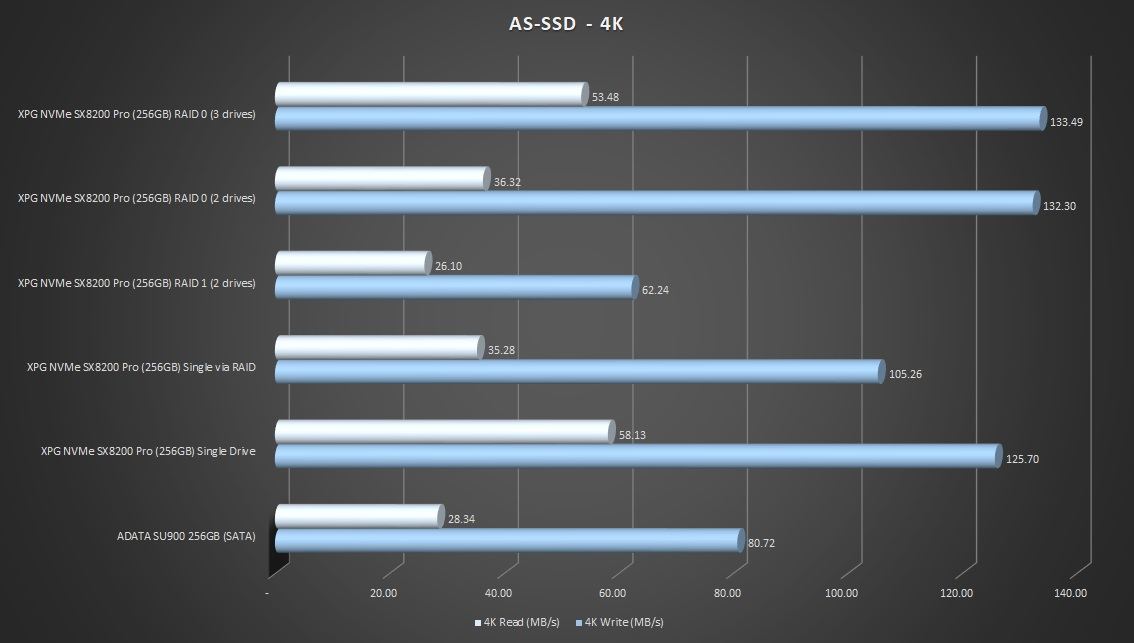

The 4K Random performance levels out the field a little more and the single NVMe performance actually delivered a better read result than any of the RAID arrays. RAID 1 takes a hit with the write performance in this test but the RAID 0 array is marginally better than the single NVMe SSD when writing data.

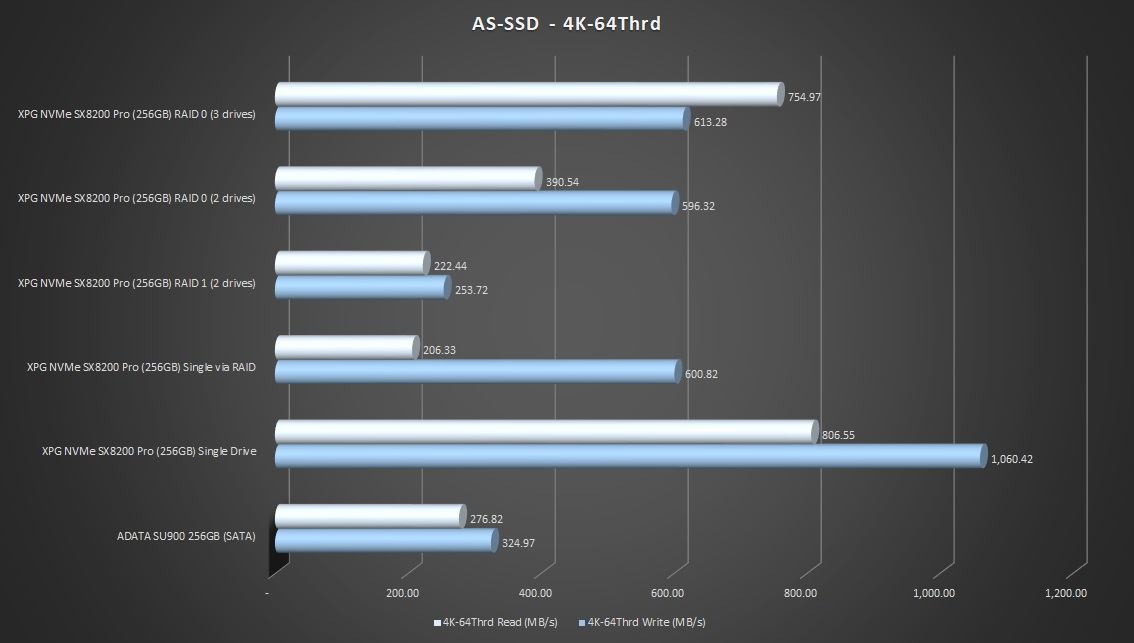

4K-64Thread performance doesn’t benefit from any NVMe RAID configuration – in fact it takes 3 drives in RAID 0 to match a single NVMe configuration. This result indicates that none of the RAID configurations provide any benefit for heavy multi-tasking scenarios with small files.

Access times are relatively close with the exception of RAID 1 which is the obvious outlier, performing worse than the SATA SSD.

The AS-SSD scoreboard has the triple NVMe RAID 0 configuration leading the way, but only just. When someone asks me “Is RAID 0 better than a single drive?”, my answer is always along the lines of “It depends” and this graph is a good illustration of why. Overall, the single drive in a standard NVMe configuration is close to that of three of the same model in RAID 0. You have to look at the Sequential and 4K results then consider the workload/use case to weigh up if the hassle and risk of RAID 0 are worth the return in terms of performance.

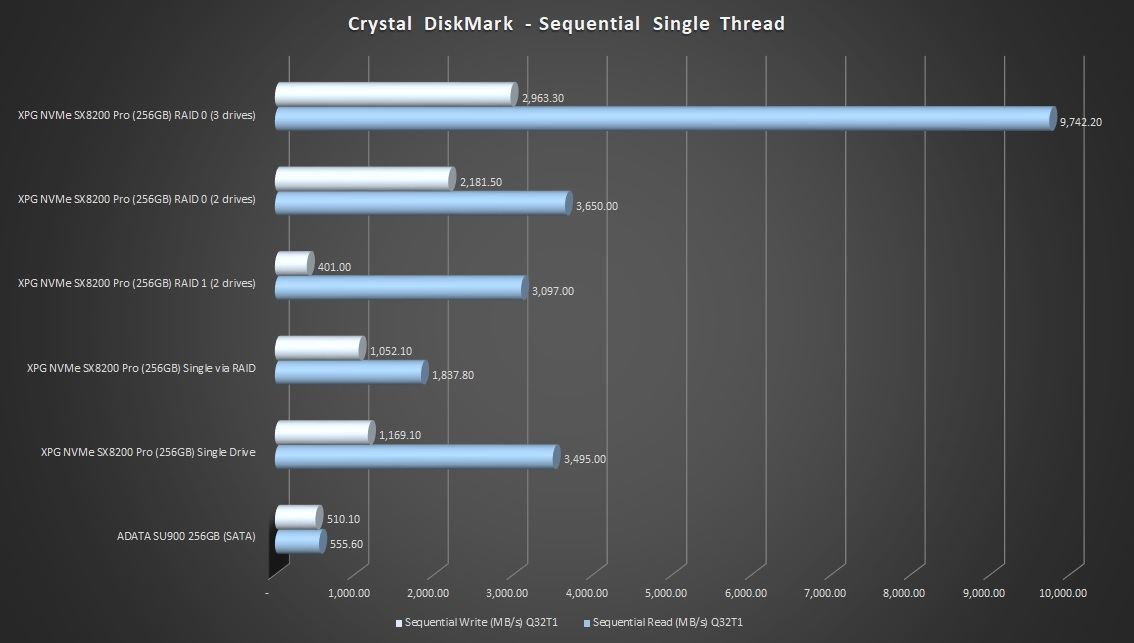

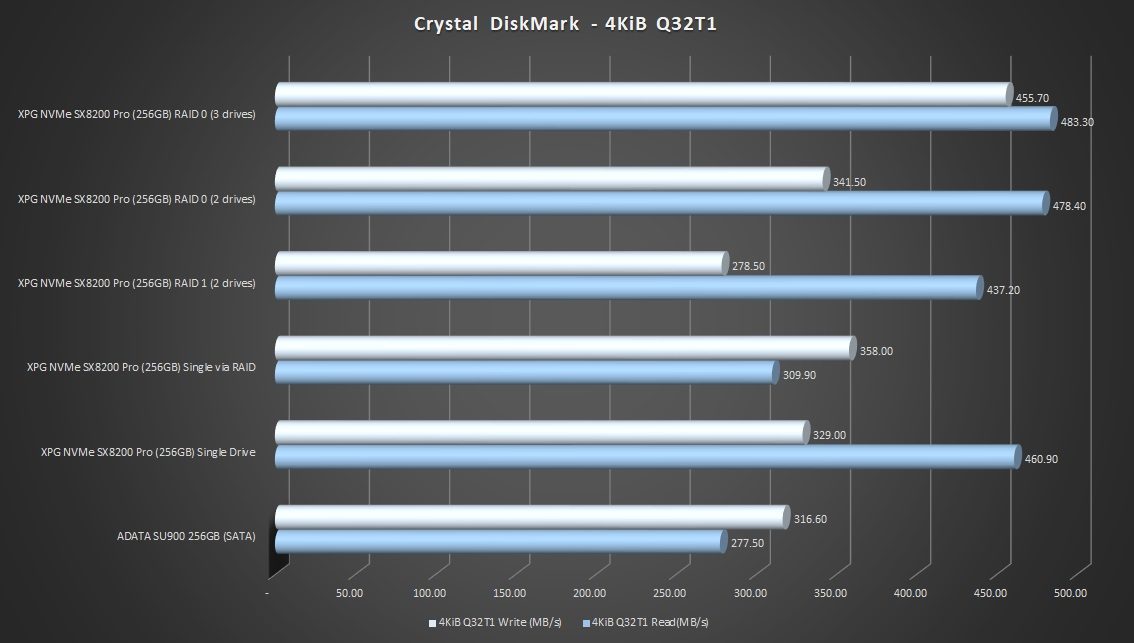

Crystal DiskMark

The Crystal DiskMark sequential test has the RAID 0 versus default NVMe performance of a single SSD worlds apart in what is the most contrasting benchmark of the entire testing phase. I ran the test several times to ensure it was accurate because 9,742 MB/s was faster than I’d hoped or expected from the RAID 0 3-drive configuration.

The sequential write performance shows a linear improvement for the RAID 0 configurations. Whilst a dual-drive RAID 0 wasn’t faster in the sequential read test, and that result was perhaps a little disappointing, the write performance was almost double what we recorded from the single SX8200 Pro SSD.

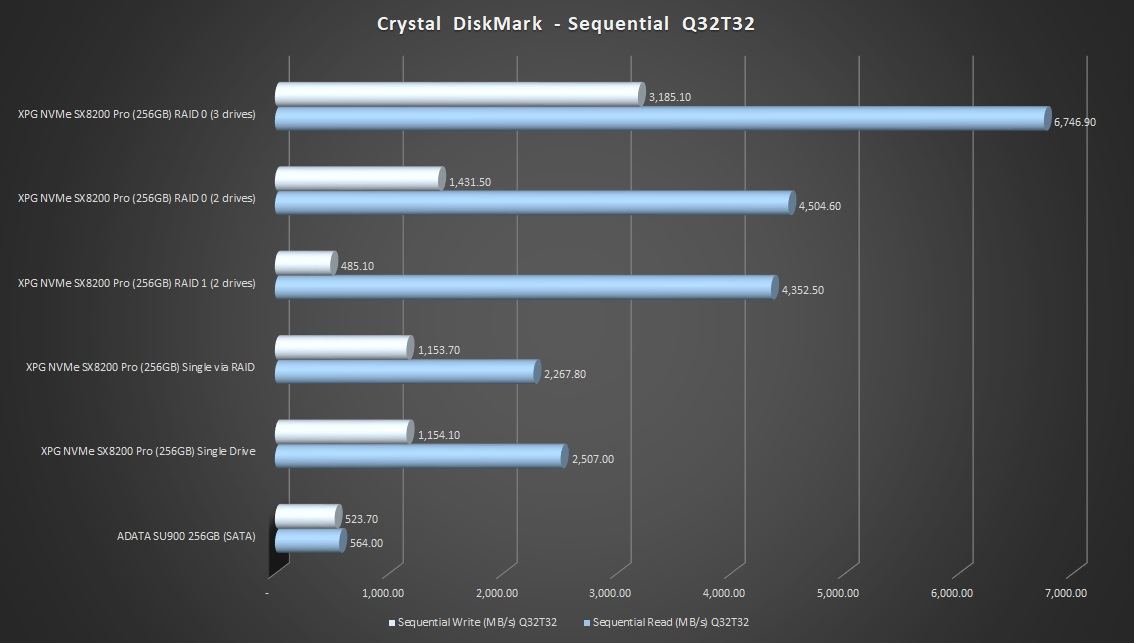

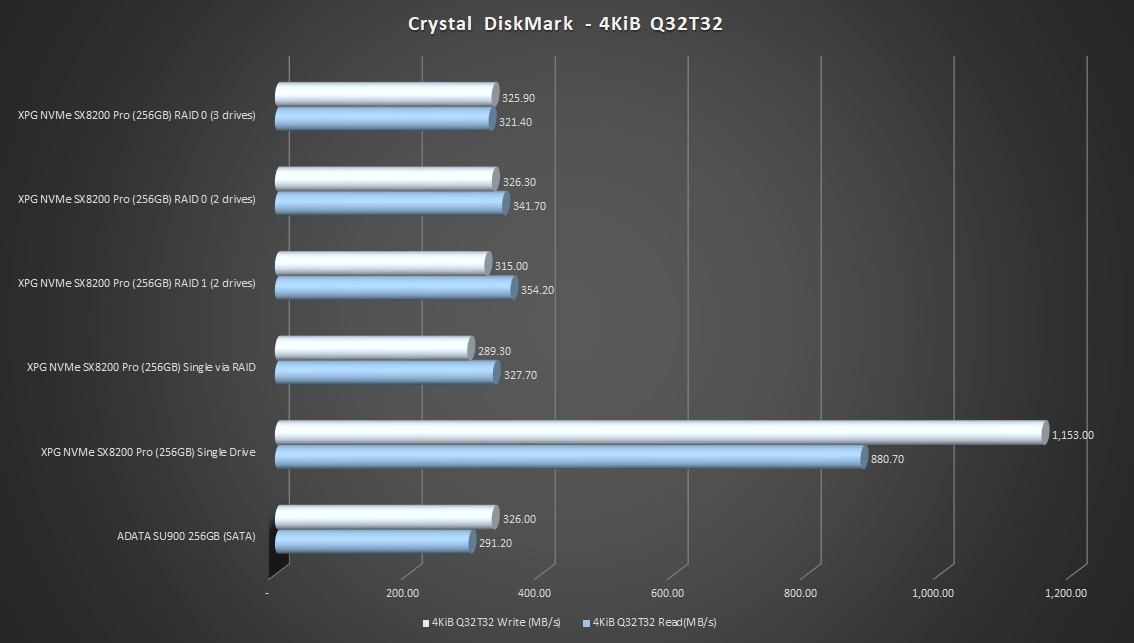

The above graph shows what happens when we queue up 32 files and choose 32 threads for the sequential data transfer. The additional bandwidth allows all the multi-drive RAID arrays to significantly outperform the single SX8200 Pro in read benchmarks. RAID 1 still takes a write speed penalty but the RAID 0 arrays take the points in write speed benchmarks in this test. The above two tests show the benefit of RAID 0 when it comes to loading or copying large files either in small numbers or in large volumes with a multi-tasking focus.

In a single-threaded test with a large queue of small files, the field is much closer. The RAID 0 read performance was faster than a single drive but not by a large margin. The triple-drive RAID 0 configuration takes the points overall but it’s close, and the margin is probably not large enough to justify the effort of implementing NVMe RAID for a typical system user.

This test is the same as the one above but with 32 threads in play and the result was the opposite to what I was expecting. In a heavy multi-tasking environment with many small files or folders the cache technology implemented on the single ADATA XPG SX8200 Pro performs noticeably better.

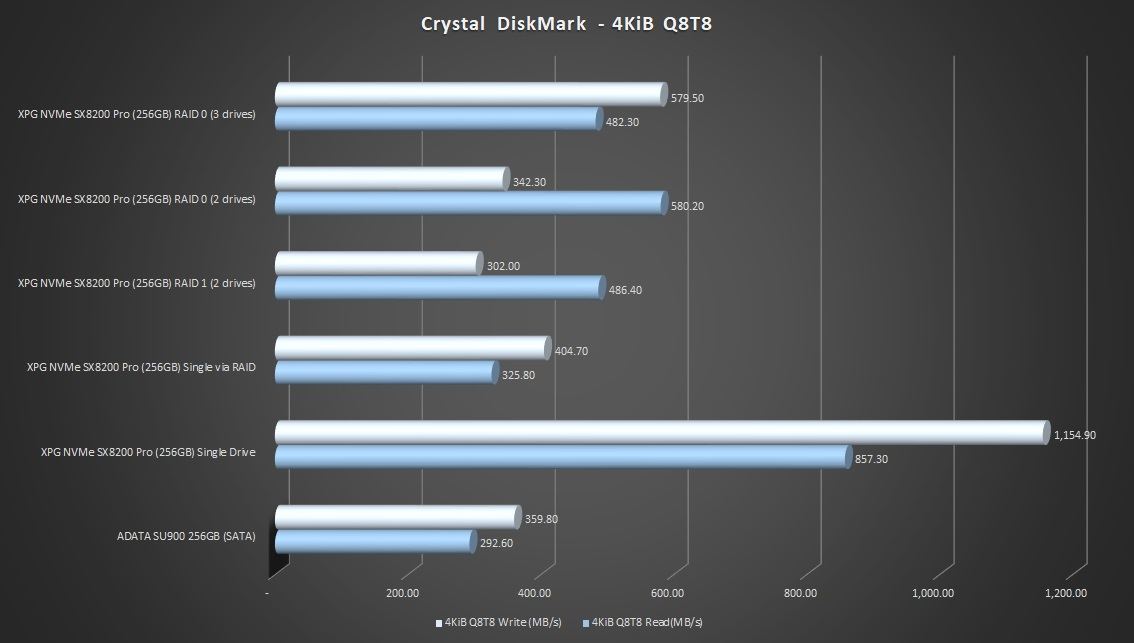

4K performance with a moderate queue depth and 8 threads showed the scaling of the RAID arrays but the single NVMe SX8200 Pro still convincingly led the way. RAID 1 performance was the slowest overall in terms of writes due to the overhead of effectively writing the data twice. Interestingly, the dual-drive RAID 0 configuration delivered a better read result than the triple-drive setup.

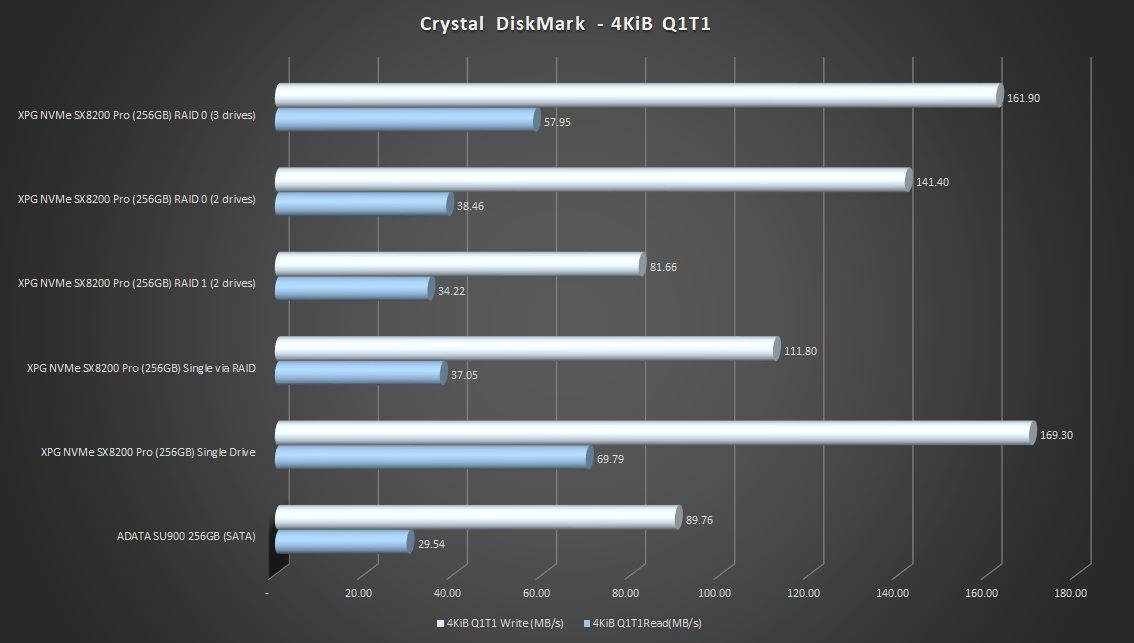

When it came to the 4K single file and single thread benchmark, there was no benefit in using a RAID solution over a single drive.

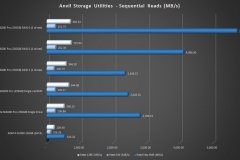

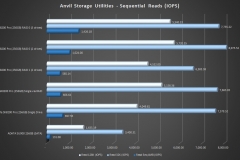

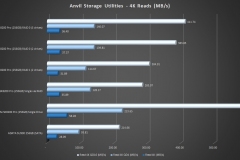

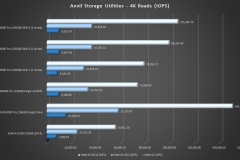

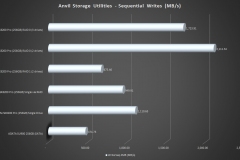

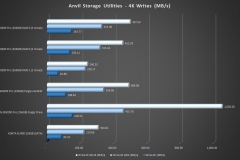

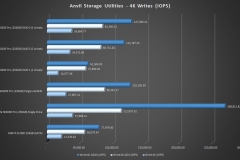

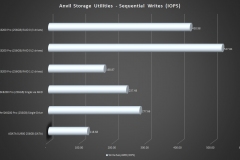

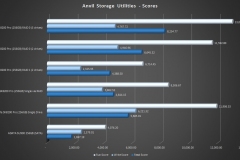

ANVIL Storage Utilities

There are a lot of benchmark results from the Anvil Storage Utilities test, some with MB/s and some measured in IOPS. The trend is aligned with the other benchmarks so I’ll summarise at the end.

Sequential read testing validated that the larger the files, the more benefit was available from RAID 0 over a single drive.

Sequential writes showed a clear benefit of configuring NVMe SSDs in a RAID 0 for larger files.

4K Read testing had the single SX8200 Pro in default mode notably superior to the 3 different RAID configurations that were almost even with each other. There was some visible scaling when the queue depth increased to 4 and then 16.

There was no clear benefit of RAID when it came to 4K writes either with the single NVMe SSD again clearly leading the way.

The final graph shows the overall Anvil scores with the 3xSX8200 Pro in RAID 0 with the highest run score of 13,022 but it should be noted that this was bolstered by the blistering sequential read scores.

Common Themes

When using a single drive, the default NVMe mode is the best for sure and the performance of a single drive in RAID mode is obviously held back by overhead of the controller – hence why RAID is disabled in BIOS by default. To be clear, I benchmarked the very same ADATA XPG SX8200 Pro unit in both default and RAID modes for the single drive testing so the only difference was the operating mode of the NVMe controller.

Sequential read and write speeds can really benefit from RAID 0. Whilst the default NVMe single drive holds its own in the 4K tests, it loses out by a wide margin to the RAID 0 configurations for sequential data.

Let’s look at some raw numbers for a second in the sequential read/write testing. I’ll use the Crystal DiskMark Sequential test as the example.

- A SATA 3 SSD can read at 555MB/s

- A single XPG SX8200 Pro NVMe SSD can read at 3,495 MB/s

- Two SX8200 Pro SSDs in RAID 0 delivered 3,650 MB/s

- Three SX8200 Pro SSDs in RAID 0 knocked out 9,742 MB/s

When it comes to reading sequential data like program files, textures or large video files, a RAID 0 consisting of three NVMe SSDs can be more than 17 times faster than a SATA SSD (9,742 MB/s versus 555 MB/s). Even if everything else was equal (or close enough), that last RAID 0 number is impressive.
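The ratios between the four Crystal DiskMark sequential read figures listed above can be worked out directly. This is a small sketch using only those quoted numbers. The dictionary labels are shorthand for this example.

```python
# Crystal DiskMark sequential read figures quoted in the list above (MB/s).
results_mb_s = {
    "SATA 3 SSD": 555,
    "1x SX8200 Pro (NVMe)": 3495,
    "2x SX8200 Pro (RAID 0)": 3650,
    "3x SX8200 Pro (RAID 0)": 9742,
}

sata = results_mb_s["SATA 3 SSD"]
single_nvme = results_mb_s["1x SX8200 Pro (NVMe)"]

# For each configuration: (speedup over SATA, speedup over a single NVMe drive).
ratios = {
    name: (speed / sata, speed / single_nvme)
    for name, speed in results_mb_s.items()
}

for name, (vs_sata, vs_single) in ratios.items():
    print(f"{name:<24} {vs_sata:5.1f}x SATA   {vs_single:4.2f}x single NVMe")
```

Laid out this way, the dual-drive RAID 0 read result barely moves past a single drive, while the triple-drive array is the only configuration that changes the picture.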

In general use, the benefit of RAID 0 was hit and miss but the key take away is that you can see some incredibly fast sequential read results from combining three NVMe SSDs in RAID 0.

If you’re not chasing that insane sequential read performance the additional cost and risk may not be worth the overhead and hassle of setting it up.

Real World Use

Productivity

Aside from the storage benchmarks, I also ran some Blender runs using the Classroom CPU benchmark file to see if there was any difference from our single NVMe drive benchmarks and the different RAID configurations. The file rendered in 7 minutes and 57 seconds on average with only a 1-2 second variation across all runs in all configurations.

The story was the same for the compression tests where I used a 2.3GB .tar archive containing 42,588 files in 6,246 folders. The archive extracted in 1 minute and 25 seconds regardless of the storage configuration. The 4K read/write performance looked more of a problem in the benchmarks but I didn’t see the scenario present an issue in real life.
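For anyone wanting to repeat a similar extraction test, a timing run could be sketched roughly as below. This is not the tool used for the article. The function name and the paths in the usage comment are hypothetical, and repeat runs can be skewed by the operating system's file cache.

```python
import tarfile
import time
from pathlib import Path

def time_extraction(archive_path: str, dest_dir: str) -> tuple[float, int]:
    """Extract a .tar archive and return (elapsed seconds, number of regular files)."""
    Path(dest_dir).mkdir(parents=True, exist_ok=True)
    start = time.perf_counter()
    with tarfile.open(archive_path, "r") as tar:
        members = tar.getmembers()
        # Only extract archives you trust; this sketch does no path sanitising itself.
        tar.extractall(dest_dir)
    elapsed = time.perf_counter() - start
    file_count = sum(1 for member in members if member.isfile())
    return elapsed, file_count

# Example usage (hypothetical paths):
# seconds, files = time_extraction("D:/bench/sample.tar", "D:/bench/out")
# print(f"extracted {files} files in {seconds:.1f} s")
```

Pointing `dest_dir` at each storage configuration in turn and taking the median of a few runs would mirror the approach used for the benchmarks.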

AMD’s Threadripper 2950X processor has 16 cores / 32 threads and it would take much larger data volumes to see the benefits of AMD NVMe RAID for rendering or archival. Once these files are loaded into the 32GB of DDR4 in Quad Channel configuration that our test system is running, the storage isn’t the limiting factor.

Gaming

The following list of games with their installation folder sizes shows how much data PC gamers need to load in 2019.

- World of Warships (33GB)

- The Division 2 (48GB)

- Fortnite (52GB)

- Star Wars Battlefront II (77GB)

- Elder Scrolls Online (78GB)

- Battlefield 1 (81GB)

- GTA V (83GB)

- Fallout 4 (94GB)

- Rainbow Six Siege (96GB)

Granted, the games don’t have to load all of that data at once and some open world games do a good job of concealing load screens by limiting the speed of player movement but those textures, assets and maps have to be loaded at some point.

I noticed that load times were much shorter for games when using RAID 0 with the three SX8200 Pro drives. When I switched back to a SATA SSD I noted that I had a lot more time to check my phone for emails or texts when waiting for games like ESO, Division 2 or Battlefield 1 to load. Once in the games themselves, titles like GTA V, Division 2, Fallout and Battlefield didn’t really feel any different but those load screens were certainly shorter when using a RAID 0 storage configuration.

The 256GB ADATA XPG SX8200 Pro NVMe M.2 SSD I used can be bought for just $69-79 AUD which makes a 3-drive RAID 0 setup compelling value given the speeds seen in the sequential testing.

Side Note: I should probably apologise to the ADATA SU900 SATA SSD for including it in the graphs. It performed well against a superior class of storage and looked bad in comparison. The SU900 is a great SATA SSD but its inclusion shows the upgrade you can get from switching to NVMe from SATA.

Conclusion

NVMe RAID has its benefits, and whilst it can drastically decrease the load times for large applications, projects and games, we should also note that a single high-end NVMe SSD like the XPG SX8200 Pro can still feed CPUs data at a rate of 3,500 MB/s or so. This is impressive compared to SATA SSDs that top out around the 550 MB/s mark or mechanical hard drives that might give us 100-150 MB/s depending on the spec and where the data is located on the platter.

A single NVMe SSD doesn’t need any special drivers or consideration when installing it and is a very simple storage solution. In terms of cost, it’s usually a few dollars cheaper to buy a larger single drive than multiple smaller drives that total the same capacity. Also consider that if you max out your NVMe slots with smaller capacity drives and want to increase your fast storage later it’s more expensive and more involved to expand an array than simply adding another 1TB NVMe SSD to an empty M.2 slot.

RAID 1 demonstrated that there is a performance hit in terms of write speed and generally very little benefit in read speeds over a single drive despite it giving a system builder some protection against hardware failure. The performance penalty probably isn’t worth it unless the workstation is either mission critical or in a remote/hard to service location.

RAID 0, whilst having no tolerance for failure can deliver faster sequential read/write performance over a single NVMe drive of the same specification. Two drives are going to be better than one from a sequential performance perspective. As long as the data is either backed up regularly, expendable or transient like a cache, the risk can be justified.

The three-drive RAID 0 configuration recorded the fastest sequential benchmarks and proved to be seriously impressive when dealing with large sequential data like loading games. The scaling was generally in line with our expectations although the 9,742MB/s in Crystal DiskMark was a pleasant surprise.

After running these tests and using the different configurations over a month I can see the value of RAID 0. I’d be inclined to implement a RAID 0 of 3x1TB ADATA SX8200 Pro NVMe SSDs for my games library to minimise the load screens and give me 3TB of very high speed storage. This data is all ‘expendable’ in my situation as it’s easily downloadable static game executables from Steam, Origin, Zenimax/Bethesda and Uplay. I also have a 4TB USB hard drive that I can use to regularly back up the library so it’s worth the risk.

It really comes down to what you want from your system and how you need to read the data – is it a lot of smaller random reads or are you typically doing large sequential reads? For average system users, the 4K test results are more relevant but gamers who want to reduce load times should be looking at the sequential tests where NVMe RAID 0 truly shines.

The 3,500MB/s speeds from a simple single NVMe SSD solution should keep most people happy and it’s the safe play. On the other hand, going RAID 0 isn’t scary, nor is it difficult. You simply need to research the pros and cons, mitigate the risks, read the instructions, go for it and don’t look back.

Can you test this with 1TB drives? Think it would be faster?

Good review

Thank you for your instruction and test. I too would like to see with 1TB. My 1950X needs this 😀

Interested in Cache settings, what would be recommended for NVME and SSD?

This is an excellent review!!! As good as it gets!!!!

Thank you!!!!

I had a problem with the central M.2 socket, which is under the chipset heat sink: my Samsung 970 Pro NVMe gets too hot and the machine restarts, so I’m just using the DIMM.2 for my M.2 SSD.

Hi – what is your full system spec?

I’ve used this test platform in a Thermaltake View 71 case with good airflow and more recently in an In-Win 303 with less effective airflow and a RADEON VII graphics card. I haven’t had any issues with the SSDs getting hot so I’d be interested to know what else you are running with your setup.

OK, I have an NZXT Kraken X73 on the top of the case, a 1900X processor, 4 DIMMs of G.Skill Trident Z RGB DDR4 (CL14, 3200 MHz), 2 front Noctua 140 mm industrial 3000 rpm fans, a 120 mm Noctua fan at the back of the case, a 950 Pro 512GB SSD, a 4TB Seagate mechanical HDD, an RX 5700 XT, the 970 Pro M.2 NVMe, a Seasonic Prime Titanium 1000 power supply and a Thermaltake Suppressor F31 case. My monitor is an AOC E2250SWDN, and I also have a Steinberg UR22mkII audio card, 2 M-Audio BX5a speakers and a Canon E401 printer. That’s all.

I can’t see anything there that would be an issue. You should have good airflow with that fan setup and you’re not running multi GPU so I’d expect the heat to be well under control.

The DIMM.2 slot is more accessible and is also in probably the coolest part of the board.

I’ve been running this RAID setup with the ZENITH as documented in the article for about a year. I had to change the Enermax TR4 AIO cooler out because it gummed up so there’s a Noctua NH-U12S TR4-SP3 fitted now. The RAID0 array and all SSDs have been perfect without any issues at all with almost daily use.

Maybe my motherboard has an issue in that M.2 socket. I think there is a problem with it because when I insert the M.2 drive it remains a bit loose until the screw is put in, unlike the DIMM.2 socket where it goes in tight. I’ll have to buy another M.2 drive and test again to confirm it.

Phil, I want to configure this as well as I can, but I don’t have a RAID configuration. I want to get the best out of this in the BIOS configuration, so any recommendation would be good. Another thing: I need to change my monitor and was looking for one that has DP 1.4 and HDMI 2.0 like my video card (ASUS ROG 8C RX5700XT), such as the BenQ EX2780Q (27″ 144Hz IPS, 2560 x 1440).

I have found good reviews of this monitor, but I have also seen many negative comments on the sales pages suggesting that it is more advertising than anything else.

I am looking for a good price-to-performance ratio that doesn’t hit my pocket too hard.

I wanted the RADEON VII for this setup but it was impossible for me to get it. Too expensive.

Now I’m thinking of using a alpha DIMM.2 that came with the heatsink. I think it would work perfectly.

Great article, as NVMe RAID setup documentation is few and far between out there. We are working on a video right now basically talking about the same thing, using 4 1TB Intel M.2 SSDs with a controller card on an AMD X570 chipset motherboard. I got everything to work fairly easily, but then, as you mentioned, I lost Windows 10.

I was trying to change the drivers just like you were talking about because Windows would not put them back to regular drives so I could test the drive as a volume, not as RAID. I was also testing 2-drive combos and different arrays, and the blue screen of death hit me a bunch of times, finally rendering Windows useless and requiring a new install.

I’m in the process of creating backups and images of Windows in case this happens again and so I can continue my testing. But I wanted to mention that from my benchmarking when it was all running, it pretty much matched a lot of your data on using a single NVMe M.2 vs RAID, especially when they are now going at the same speed with PCIe 3.0 speeds or 4.0 if your drives are 4.0.

Just as your very well written article suggests, I’m questioning the usefulness of a RAID 0 as an everyday hard drive for video editing in DaVinci Resolve.

My question to you is this….When running your RAID 0 have you had any drive failures or anything happen that would sway you away from using RAID 0?

I have been running the same setup since the article went live and I haven’t had a single issue or any failed drives. The M.2 locations on the X399 Zenith are out of the hot zones so they won’t get cooked but this doesn’t make them immune to random failure. The rig is set up to boot from a 2.5″ SATA SSD so I can still try to roll back any updates that conflict with the RAID driver, and I don’t keep anything on the RAID 0 array that isn’t backed up, so for me it’s low risk and low impact if I have a problem.

I haven’t had anything happen that would sway me away from AMD NVMe RAID specifically but there is one downside that I can see – upgrading. I have 3x256GB SSDs in the array on the X399 Zenith, using up all available M.2 slots. This was done as a proof of concept. If I want to upgrade it, I’ll have to buy 3 larger SSDs and rebuild the array.

All up, it works well and my top recommendation is NOT to use it as a system drive – standard SSDs are going to be fast enough for most people as a boot drive anyway. Keep the important elements of your rig simple.

Did you configure/test TRIM in the RAID setup? I’m trying to see if it is possible to configure TRIM on NVMe AMD RAID.

Hi Sam – Good question.

When I did this project there was little detail available regarding TRIM for AMD NVMe RAID. Most of the material I found indicated that TRIM wasn’t supported back then so I didn’t configure and test that element. The array was set up per official guidelines from both AMD and ASUS to go through the recommended/supported approach and show the results that we could expect. Now that you’ve raised the question I’ll have to add it to the list of things to revisit 😉

Did you ever figure out if Trim worked in this setup? I’m finding conflicting information all over the place!